Tips For Selecting The Best Web Scraping Software for Your Data Needs

Scraping data online can be a great way to gather information on your competitors, see what they’re doing well, and work out how to apply it to your own business model. The problem is that no one wants to be scraped, and many anti-scraping countermeasures are available. Efficiency can also be a concern, since not all scrapers work the same way. With this in mind, here are several tips for choosing the best scraping software for your data needs.

- Affordability

Before you consider which web scraping software to use, you must first understand the costs involved. Generally speaking, using a scraper will cost you in two ways: computer resources and money.

If you choose a licensed scraper installed on your own hardware, it is often cheaper over time than subscribing to a cloud-based service and typically costs only a one-time fee. However, a local scraper uses more of your own computing resources, so you must ensure your hardware can handle it.

In either case, make sure you have a budget when shopping for scrapers. Also, remember that if you choose a locally installed scraper, your PC or Mac must stay powered on for as long as the scraper is running, which may be around the clock. This won’t affect your energy bill too much in most cases, but it is something to consider.
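To make the budget comparison concrete, here is a minimal sketch of a break-even calculation between a one-time license and a monthly subscription. The function name, its parameters, and the prices in the usage note are all hypothetical and are not taken from any real product.

```python
import math
from typing import Optional


def break_even_months(license_fee: float,
                      monthly_subscription: float,
                      monthly_running_cost: float = 0.0) -> Optional[int]:
    """Return the first whole month in which the one-time license is no
    more expensive than subscribing, or None if that never happens.

    monthly_running_cost is an assumed figure for electricity and wear
    on the local machine.
    """
    monthly_saving = monthly_subscription - monthly_running_cost
    if monthly_saving <= 0:
        # Running locally costs as much as the subscription, so the
        # license fee is never recovered.
        return None
    return math.ceil(license_fee / monthly_saving)
```

For example, with an assumed license fee of 300, a subscription of 50 per month, and 5 per month in running costs, `break_even_months(300, 50, 5)` returns 7, meaning the license pays for itself in the seventh month.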

- Getting Around Anti-Scraper Technology

As web scraper technology has evolved over the years, so has the technology for countering it. This includes obvious countermeasures such as CAPTCHA fields and IP blocking if the server discovers your scraper isn’t human. However, there are much more subtle anti-scraper techniques, such as shadow blocking or deliberately feeding your scraper false data. Since these are real problems, you need a scraper that is programmed to deal with the latest anti-scraper methods it will encounter.
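As a rough illustration of how a scraper might notice the more obvious countermeasures, the sketch below flags a response that looks like a block or a CAPTCHA page. The status codes and phrases are assumptions chosen for illustration; real sites vary widely, and a simple check like this cannot detect shadow blocking or deliberately false data.

```python
# HTTP 403 (Forbidden) and 429 (Too Many Requests) are often, but not
# always, returned when a server refuses automated traffic.
BLOCK_STATUS_CODES = {403, 429}

# Illustrative phrases only; a real block page may use different wording.
CAPTCHA_HINTS = ("captcha", "verify you are human")


def looks_blocked(status_code: int, body: str) -> bool:
    """Heuristically decide whether a response is a block or CAPTCHA page."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    lowered = body.lower()
    return any(hint in lowered for hint in CAPTCHA_HINTS)
```

A scraper could call `looks_blocked(response_status, response_text)` after each request and slow down or switch identity when it returns `True`.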

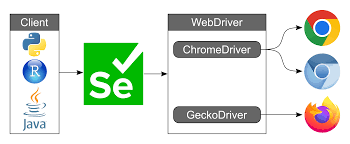

- Cross-Platform and IP Cycling

Since many scrapers run as browser extensions, it can be a good idea to look for one that works across several browsers and platforms. This is important because browser cycling, where the scraper switches between browsers from one request to the next, is one of the main ways that scrapers mimic human behaviour to get past anti-scraper countermeasures. Another way that scrapers appear more human is IP proxy cycling. This makes the scraper’s requests appear to come from more than one person, so that its behaviour looks less machine-like. It can also make it possible to get around an IP ban.
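The cycling idea described above can be sketched in a few lines. This example only produces the settings for each request and does not send anything over the network. The user-agent strings and proxy addresses are placeholders, and `rotating_settings` is a hypothetical helper rather than part of any scraping product.

```python
from itertools import cycle

# Placeholder values; a real scraper would use genuine browser
# user-agent strings and proxies it is permitted to use.
USER_AGENTS = [
    "ExampleBrowser/1.0 (placeholder)",
    "ExampleBrowser/2.0 (placeholder)",
]
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def rotating_settings(user_agents, proxies):
    """Yield an endless sequence of (headers, proxy) pairs, moving to the
    next user agent and the next proxy on every request."""
    for user_agent, proxy in zip(cycle(user_agents), cycle(proxies)):
        yield {"User-Agent": user_agent}, proxy


if __name__ == "__main__":
    settings = rotating_settings(USER_AGENTS, PROXIES)
    for _ in range(4):
        headers, proxy = next(settings)
        print(headers["User-Agent"], "via", proxy)
```

Because the two lists have different lengths, the same user agent and proxy are not always paired together, which makes the traffic pattern slightly less regular.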

- Usage Limitations and Storage

If you choose a cloud-based scraper, you will usually face several limits. These include the number of websites you can scrape, how much data you can store, and the number of scraping requests you can make. A locally installed scraper has none of these limits, but you will have to provide the storage yourself. Data scrapers collect a lot of data, and you will need somewhere to keep it. If your main hard drive is running out of space, an external hard drive can solve the problem.
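A quick back-of-the-envelope estimate can show whether your current drive, or a cloud plan's storage cap, is likely to be enough. The sketch below assumes you can guess the number of pages scraped per day and their average size; the function name and the example figures are illustrative only.

```python
def estimated_storage_gb(pages_per_day: int,
                         avg_page_kb: float,
                         days: int) -> float:
    """Estimate the raw storage needed, in gigabytes (1 GB = 1024 * 1024 KB
    here), before any compression or deduplication."""
    total_kb = pages_per_day * avg_page_kb * days
    return total_kb / (1024 * 1024)
```

For instance, 10,000 pages a day at an assumed 100 KB each comes to roughly 28.6 GB after 30 days.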

- Synchronous vs Asynchronous

Synchronous scrapers can only scrape one website at a time. This means they cannot accomplish anything useful while waiting for the website to reply. Asynchronous scrapers, on the other hand, can scrape multiple websites at once, so there is virtually no downtime. The drawback is that asynchronous scrapers are more resource intensive. This is fine if your scraper is cloud-based, since the cloud handles the entire process. If you’re using a locally installed scraper, however, you will need to ensure that your computer hardware can handle the resource consumption of an asynchronous scraper.
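The difference in waiting time can be demonstrated with Python's `asyncio` module. In this sketch, `fake_fetch` is a stand-in that simply sleeps instead of contacting a real website, so the URLs and the delay are assumptions. The one-at-a-time version imitates a synchronous scraper by finishing each fetch before starting the next.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.2) -> str:
    """Pretend to download a page; the sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return f"content of {url}"


async def scrape_sequentially(urls):
    """Fetch one page at a time, idling during every wait."""
    return [await fake_fetch(url) for url in urls]


async def scrape_concurrently(urls):
    """Start all fetches together so the waits overlap."""
    return await asyncio.gather(*(fake_fetch(url) for url in urls))


def timed(scrape, urls):
    """Run a scrape function and return its results and elapsed seconds."""
    start = time.perf_counter()
    results = asyncio.run(scrape(urls))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"https://example.com/page{i}" for i in range(5)]
    _, sequential_time = timed(scrape_sequentially, urls)
    _, concurrent_time = timed(scrape_concurrently, urls)
    print(f"one at a time: {sequential_time:.2f}s")
    print(f"concurrent:    {concurrent_time:.2f}s")
```

With five simulated pages, the sequential run takes about five times the delay, while the concurrent run takes about one delay. A real scraper would hold more connections and responses in memory at once, which is where the extra resource use comes from.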

Picking a Scraper That Meets Your Needs

Ultimately, many factors go into choosing a scraper. Before weighing them, you need to identify what your data needs actually are. This will give you a better idea of which scraper you need and how it will be used. Once you have done that, you’ll be scraping data in no time.